We rank almost everything: the top 10 restaurants in our vicinity, the best cities to visit, the best movies to watch. To understand whether the rankings are any good, you’d want to know who is doing the ranking. And what it is they are looking for.

These are exactly the same questions that are worth asking when looking at the international ranking of universities.

University rankings began a couple of decades ago. Since then they have become omnipresent, projecting an air of importance and validity. Institutions, especially those ranked highly, take them seriously. Some allocate staff time to collate the data that rankers ask for. University donors take them seriously, journalists popularise them and some parents use them to choose where their children should study.

There are many university ranking systems and many rankers. Some ranking systems are better known than others. Those that get the most coverage in the media and seem to be most influential are Quacquarelli Symonds (QS), Times Higher Education (THE), Shanghai Ranking Consultancy and US News & World Report.

A group of experts recently got together to look critically at ranking systems. (I was among them.) We were convened by the United Nations University International Institute for Global Health, which issued a press release on our report.

We concluded, firstly, that there is a conceptual problem with rankings. It’s not sensible to put all institutions in one basket and expect to come up with something useful.

We also concluded that their methods are unclear and some seem to be invalid. We would not accept research with poor methods for publication, yet somehow rankers get away with sloppy methods.

We noted that rankings are massively overvalued and reinforce global, regional and national inequalities. And, lastly, we found that too much attention to rankings inhibits thinking about education systems as a whole.

Who does the ranking, and how

The organisations doing the rankings are private, for-profit companies. Ranking agencies make money in various ways: by harvesting data from universities, which they then commodify; selling advertising space; selling consultancy services (to universities as well as governments); and running fee-paying conferences.

Each ranker and each ranking has a different approach. They all ultimately generate an index or score from the data they collect. But it is not clear how they come up with their scores. They are not completely transparent about what they measure and how much each component of the measure counts.

For example, Times Higher Education sends out a survey to academics, who are invited to rate their own (or other) institutions. This measure will be influenced by how many people respond, who those people are and whether they actually know anything about the institution they are rating.

So it is easy to get a number out of such a survey, but is it valid? Does it reflect reality? Is it free of bias? If I work at a particular institution, is it possible, and perhaps even likely, that I will rate it highly? Or, if I am unhappy at that institution, I may want to rate it poorly. Either way, it is not a good measure of reality.

Rankers also use other measures that may be considered more objective. For example, they look at the publications that universities produce.

Firstly, a lot of research has shown that what gets published, and who gets published, is itself biased. In addition, when you look closely, the rankers pay more attention to particular kinds of research: science, technology, engineering and maths. They don’t count everything, they don’t count it equally and they don’t tell the people who use the rankings how they have weighted what they do count.

Why should we care?

Universities have many roles to play in society. In addition, a lot of public money goes into universities. We, the public, should care how that money is spent.

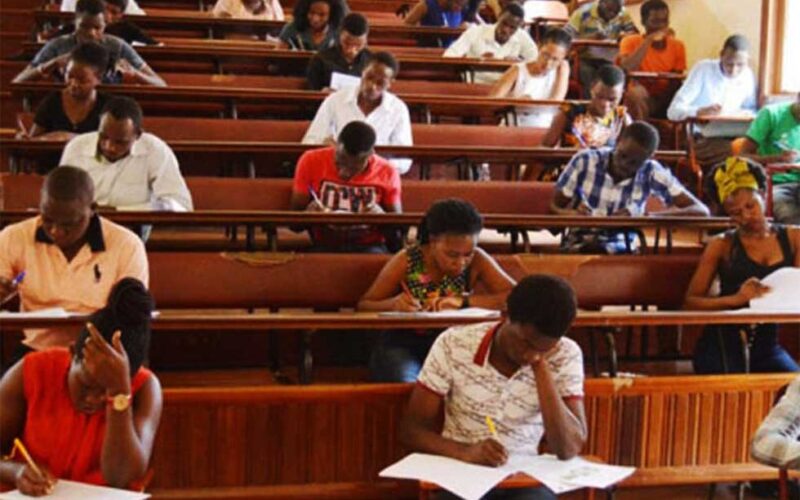

What if a university is graduating lots of students who go on to fill important roles in society, staffing schools, hospitals and the civil service, and who are good at their jobs? That is a good university; it is fulfilling an important social function. It seems a good place for tax money to go.

What if another university is doing research that results in good public policy that helps governments implement programmes that decrease unemployment among youth or decrease crime? That is a good university.

Neither institution may rank highly. But it’s hard to argue that they are not good universities.

Single-ranking systems don’t serve society. If rankings are taken too seriously, they are allowed to shape the higher education system, and that can undermine what a higher education system should be doing: contributing to a better society.

Too much attention to these rankings inhibits thinking about education systems as a whole. There are so many important questions to be asked about a higher education system.

The questions that should take precedence include:

- Do we have the right mix of tertiary education institutions and do they fit together well?

- Are our research institutions producing enough high-quality research to help us develop as a nation or region?

- Are they producing enough master’s or PhD graduates to staff other tertiary education institutions (as well as other sectors in society)?

The rankings steer universities away from these key endeavours. An obsession with ranking creates perverse incentives to improve a rank rather than to get on with the worthwhile work of universities.

What to do?

We all need to understand that rankings are neither objective nor simply true. Profit-driven companies will inevitably push ranking systems towards making more profit rather than towards the public interest and the social functions of universities.

We need rankers to be completely transparent so we can see whether their information is useful and valid, and in what ways the rankings they produce can legitimately be used. And they need to acknowledge their inherent conflict of interest.

Once we understand what the rankings are, and what they are not, we will not value their reports as much. This should prompt us to refuse to play the game on their terms.

We need to understand how their ranking methods reinforce an incorrect, or at least incomplete, view of the world: that things of high value are Western and English-speaking. Institutions that claim to be interested in the decolonisation project should be motivated to opt out of being ranked.

It’s worth remembering that rankings didn’t always exist. And they don’t have to continue to exist. And they should not, in their current form.

- This article is republished from The Conversation under a Creative Commons license. Read the original article.